Protecting Data Privacy, Preventing IP Leakage, and Preserving Trust

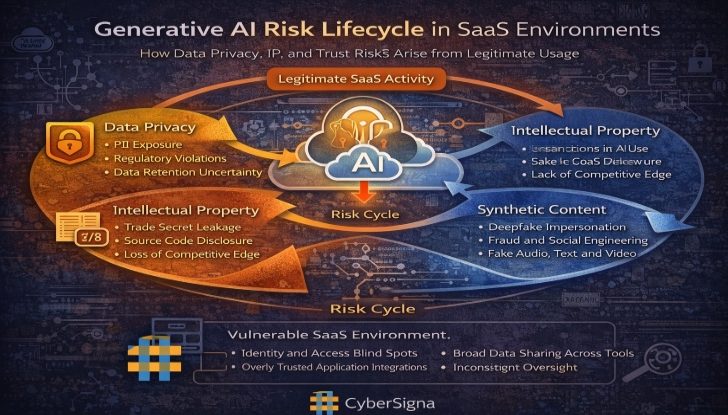

Generative AI is no longer a standalone tool used by a handful of early adopters. It is rapidly becoming an embedded capability across SaaS platforms, woven directly into productivity tools, collaboration systems, and business workflows. As AI-driven functionality becomes a default feature rather than an opt-in choice, organizations are exposed to new categories of risk that arise from legitimate use, not malicious activity.

In SaaS environments that are already highly interconnected, user-driven, and identity-centric, GenAI acts as a powerful risk multiplier. Sensitive data, intellectual property, and trusted communications can be exposed, transformed, or misused through everyday workflows, often beyond the visibility of traditional security controls. What looks like normal productivity can quietly become a material risk.

Why GenAI Fundamentally Changes SaaS Risk

SaaS platforms are designed to remove friction. They encourage rapid adoption, easy sharing, and seamless collaboration across teams and tools. GenAI amplifies these characteristics by enabling users to submit, generate, and redistribute large volumes of information almost instantly.

As a result, AI capabilities are frequently enabled by default, difficult to constrain without affecting productivity, and capable of propagating data far beyond its original context. Inputs and outputs move quickly across applications, users, and workflows.

Unlike traditional security threats, GenAI risk does not require a breach. It emerges inside approved platforms, through authenticated users, and within sanctioned business processes. The threat model shifts from intrusion to exposure through normal use.

GenAI as an Implicit SaaS Capability

Generative AI is transitioning from a differentiating feature to a baseline expectation in SaaS applications. This evolution carries important security implications. Organizations may inherit AI-driven risk without making explicit adoption decisions. New AI features may appear incrementally through updates, and meaningful opt-out controls may be limited or unavailable.

Over time, exposure to GenAI becomes structural rather than optional. Risk is introduced not by choosing to deploy AI, but by continuing to use modern SaaS platforms.

Core Risk Areas in AI-Enabled SaaS

Data Privacy and Regulatory Exposure

GenAI systems process user-supplied inputs that may include personal data, regulated records, or confidential business information. Within SaaS environments, this data can persist beyond its intended lifecycle, be processed across jurisdictions, or become visible through collaboration and sharing features.

A central challenge is the lack of transparency around how AI systems store, reuse, or learn from submitted data. Even when usage is well-intentioned, uncertainty around data handling creates governance and compliance risk that is difficult to assess or audit.
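One way to reduce this exposure is to strip obvious identifiers from text before it reaches an AI feature. The following is a minimal sketch, not a complete data loss prevention control: the function name `redact_prompt` and the two patterns are assumptions chosen for illustration, and real personal data takes many more forms than these.

```python
import re

# Illustrative patterns only (assumptions for this sketch).
# Real detectors need far broader coverage and testing.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact_prompt(text: str) -> tuple[str, dict[str, int]]:
    """Replace each match with a placeholder and return per-label counts."""
    counts: dict[str, int] = {}
    for label, pattern in PATTERNS.items():
        # subn returns the rewritten text and the number of substitutions.
        text, n = pattern.subn(f"[{label}]", text)
        if n:
            counts[label] = n
    return text, counts
```

The returned counts could feed an audit log, so that what was withheld from the AI feature is at least recorded even when the downstream handling of the remaining text is opaque.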

Intellectual Property Leakage

Employees increasingly rely on GenAI to draft documents, generate code, summarize contracts, and analyze internal material. When proprietary information is entered into AI-enabled workflows, trade secrets, source code, designs, and strategic insights may be unintentionally disclosed or reused in ways that erode competitive advantage.

This risk is rarely driven by malicious intent. It is driven by convenience, speed, and the normalization of AI assistance in daily work. As a result, traditional enforcement and awareness controls often fall short.

Prompt Manipulation and Indirect Exposure

GenAI systems respond to inputs in ways that are probabilistic rather than deterministic. Crafted prompts can influence outputs, surface unintended information, or alter system behavior. In shared SaaS workflows, this can result in the leakage of internal context, misleading or inaccurate outputs, or unintended disclosure through automation.

These risks do not stem from technical compromise, but from misplaced trust in AI-generated content and assumptions about its boundaries.

Deepfakes and Synthetic Content

Advances in generative AI now allow for highly realistic synthetic text, audio, and visual content. Within SaaS platforms, this capability introduces new forms of risk, including impersonation of executives or trusted users, fraudulent approvals, and scalable social engineering conducted through legitimate communication channels.

As synthetic content improves, traditional signals of authenticity become less reliable. Trust in digital communication, once assumed, must now be actively verified.

Why GenAI Risk Is Hard to Detect

GenAI-driven risk is difficult to identify because it hides in plain sight. Activity occurs entirely within legitimate SaaS platforms. Inputs and outputs resemble normal work. AI behavior varies with context and interaction. Traditional security tools remain focused on endpoints, networks, and files rather than on data usage and intent.

What appears to be productivity is often indistinguishable from exposure.

Governance Gaps and Shadow Usage

GenAI adoption follows the same bottom-up pattern that characterized SaaS adoption itself. Users experiment independently. Policies lag behind behavior. Oversight is uneven.

Restrictive bans may temporarily reduce visible risk, but they are rarely sustainable. Employees will continue to use AI capabilities outside sanctioned channels, shifting risk rather than eliminating it. The challenge is not stopping AI use, but governing it responsibly.

Identity and SaaS Configuration as Risk Amplifiers

GenAI does not introduce risk in isolation. It magnifies existing weaknesses in SaaS environments, particularly around identity, access, and configuration. Overly permissive sharing, long-lived integrations, and poor visibility into connected applications all increase the blast radius of AI-driven exposure.

In practice, AI features inherit the trust and access models of the platforms they are embedded in. Weak foundations become more consequential.
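A posture review along these lines can be sketched as a simple check over an inventory of connected applications. Everything here is hypothetical: the `Integration` fields, the scope names, and the 90-day threshold are assumptions made for the example, not the data model of any particular SaaS platform.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Integration:
    name: str
    scopes: set[str]
    token_issued: date


# Hypothetical scope names and threshold, chosen only for illustration.
BROAD_SCOPES = {"files.read_all", "mail.read_all", "admin"}
MAX_TOKEN_AGE_DAYS = 90


def review_integration(item: Integration, today: date) -> list[str]:
    """Return human-readable findings for one connected application."""
    findings = []
    broad = sorted(item.scopes & BROAD_SCOPES)
    if broad:
        findings.append("broad scopes: " + ", ".join(broad))
    age = (today - item.token_issued).days
    if age > MAX_TOKEN_AGE_DAYS:
        findings.append(f"token is {age} days old")
    return findings
```

An empty list means the integration raised neither flag under these assumed rules. It does not mean the integration is safe.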

Why Perimeter-Based Controls Fall Short

GenAI risk manifests inside cloud platforms, beyond the reach of traditional perimeter, endpoint, and network defenses. Blocking tools or scanning files is insufficient when risk arises from legitimate data usage by authorized users.

Effective control depends on understanding how data flows through AI-enabled workflows, how outputs are shared, and how behavior deviates from intent. Monitoring behavior matters more than detecting binaries.

Governing GenAI Risk in SaaS Environments

Managing GenAI risk requires a shift from prevention to governance. Organizations must define what constitutes acceptable AI usage, educate users on data sensitivity and AI limitations, review AI capabilities in high-risk workflows, continuously assess SaaS posture, and assign clear ownership for AI-related risk.

GenAI should be treated as a data processor embedded within business operations, not as a neutral or infallible assistant.
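Defining acceptable AI usage becomes easier to enforce and audit when the policy is written down as data rather than left in a document. The sketch below assumes three data classifications and two tool categories. All of these names are placeholders, and a real policy would reflect the organization's own classification scheme.

```python
# Hypothetical policy: which categories of AI tool may process which
# classes of data. Labels are placeholders for this sketch.
ALLOWED_AI_USE = {
    "public": {"embedded_assistant", "external_chatbot"},
    "internal": {"embedded_assistant"},
    "confidential": set(),
}


def is_ai_use_allowed(classification: str, tool: str) -> bool:
    """Deny by default: unknown classifications or tools are not allowed."""
    return tool in ALLOWED_AI_USE.get(classification, set())
```

The deny-by-default lookup is a deliberate choice in this sketch: data that has not been classified is treated as not approved for AI processing until someone with ownership decides otherwise.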

Strategic Implications

GenAI adoption is accelerating faster than most security and governance frameworks. Organizations that fail to adapt may face regulatory exposure, intellectual property loss, fraud and impersonation incidents, and erosion of trust in digital systems.

GenAI raises the bar for SaaS security maturity. It rewards organizations that understand their data, identity, and application ecosystems, and penalizes those that rely on outdated assumptions about control and visibility.

Key Takeaway

GenAI risks in SaaS environments are neither hypothetical nor driven by edge cases. They arise from everyday use, trusted platforms, and well-intentioned users.

Protecting data privacy, preventing IP leakage, and preserving trust require intentional governance, visibility, and accountability, not blanket bans or reactive controls. In a SaaS-first world, responsible GenAI adoption is inseparable from a strong SaaS security posture.

CyberSigna Analyst Note

GenAI does not replace existing SaaS risks. It amplifies them. Organizations that truly understand their SaaS ecosystem and identity landscape will be best positioned to harness AI safely and sustainably.