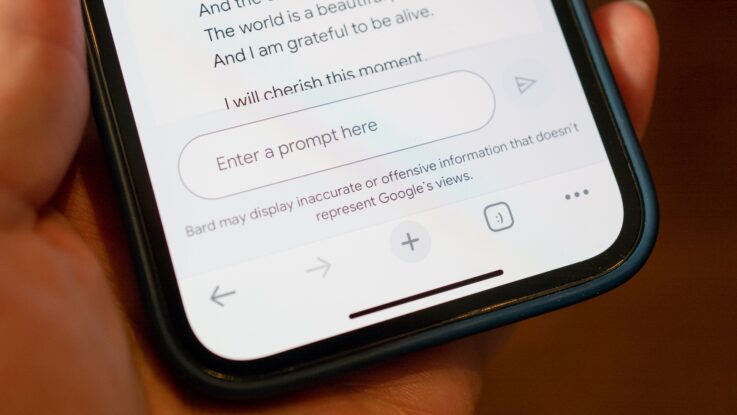

When Google’s artificial intelligence chatbot Bard recently began asking for precise geolocation information, it may not have seemed unusual to many based on the tech giant’s propensity to want to know as much as possible about everyone.

Google products such as Maps and Search also prompt users to give up this kind of information. But privacy experts caution that the request from its AI chatbot represents a growing creep in data collection by large language models that could lead to an array of potential privacy harms.

“There’s a whole host of reasons to be concerned about the security of location data and its implications for the privacy of users of the system,” said Sarah Myers West, managing director at the AI Now Institute, a research institute that studies the social implications of AI.

That includes the potential subpoenaing of that data by law enforcement, a concern that has become especially pronounced amid growing worries about how law enforcement may access geolocation data in cases criminalizing access to abortion. The abuse or breach of geolocation data can also lead to other harms, such as stalking.

Concerns about sharing location data with AI models speak to the “wild west” nature of a rapidly growing industry that is beholden to few regulations and largely opaque to consumers and lawmakers. Consumer technologies such as Bard are less than a year old, making it largely unclear what repercussions they could have for privacy down the line.

The rapid growth in the industry has left regulators in the U.S. and abroad sorting out how the technology fits under current privacy regulations. OpenAI, a leader in the field, landed in hot water in Italy earlier this year after the country’s data protection authority accused it of violating the EU’s data protection rules. More recently, U.S. regulators have warned AI companies “sprinting” to train their models on more and more data that existing consumer protections still apply and that failing to heed them could lead to enforcement.

According to Google’s privacy policy for Bard, Google uses the data it collects — including information about locations — “to provide, improve, and develop Google products and services and machine learning technologies, including Google’s enterprise products such as Google Cloud.”

“Bard activity is stored by default for up to 18 months, which a user can change to 3 or 36 months at any time on their My Activity page,” Google said in a statement. Google’s privacy policy says it may share data with third parties including law enforcement.

OpenAI’s privacy policy says that it may share geolocation data with law enforcement, but ChatGPT’s user policy does not make clear whether, or in what circumstances, ChatGPT collects this data. OpenAI did not respond to a request for clarification.

Precise geolocation data isn’t the only form of location data that companies collect. For instance, both Bard and its competitor, OpenAI’s ChatGPT, collect IP address data, which reveals geolocation information but not precise physical locations.

However, detailed geolocation data is “substantially more sensitive,” because it can be used to track your exact movements, explains Ben Winters, senior counsel at the Electronic Privacy Information Center, a nonprofit advocacy group.

Location data is considered so sensitive that some members of Congress have sought to ban its sale to data brokers. In the wake of the Dobbs decision that overturned Roe v. Wade, Google itself pledged to wipe location data from Maps users’ visits to reproductive health clinics, though recent reporting shows deletions have been inconsistent.

Sharing any form of personal data with generative AI models can be risky, experts say. In March, OpenAI took ChatGPT offline to fix a bug that allowed users to view prompts from other users’ chats and, in some cases, payment-related information of subscribers. It’s also unclear in many cases where data used for training large language models could end up or if it might be regurgitated to other users down the road.

Major companies including Apple and Samsung have restricted their employees’ use of the tools over fear that it could result in the leak of trade secrets. Leading lawmakers have also pressed AI companies on what steps they take to secure sensitive user data from misuse and breaches.

“This technology is fairly nascent,” said Myers West from the AI Now Institute. “And I just don’t think that they themselves have fully dialed in the privacy-preserving capabilities.”

In response to some of these concerns, OpenAI in April introduced data control features for users, including allowing them to turn off chat history. Google Bard also gives users the option to review and delete their chat history.

Experts note that Google’s grab for geolocation data also plays into competition concerns in the AI industry: big tech companies will be able to use the burgeoning generative AI market to bulk up their data collection on consumers even further and gain a competitive edge.

“I think that there is a trend toward increased density of data collection,” said Winters, noting that Google’s move could give the rest of the industry a reason to ramp up data collection.

Winters said users should be cautious about models that collect anything beyond their input and account information and those that say they use the data for any purposes beyond providing an output or potentially improving the model.

While regulations around large language models are still nascent, some regulators have already fired warning shots for the industry to heed.

The post AI chatbots want your geolocation data. Privacy experts say beware. appeared first on CyberScoop.