The new executive order on artificial intelligence (AI) signed by US President Joe Biden outlines how the industry needs to ensure AI is trustworthy and helpful. The order follows high-profile discussions in July and September between AI companies and the White House that resulted in promises about how AI companies will be more transparent about the technology’s capabilities and limitations.

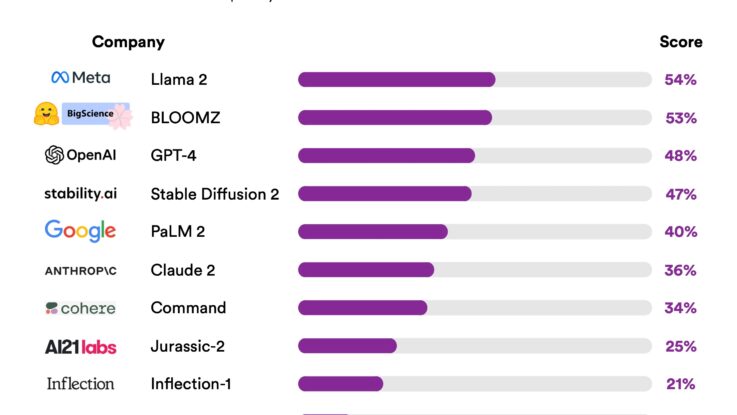

Promising to be transparent is a good step forward, but there needs to be a way to measure how well those promises are being kept. One method could be the Foundation Model Transparency Index developed by Stanford University’s Center for Research on Foundation Models. The index graded 10 AI models against 100 different metrics, including how the models are trained, information about the models’ properties and functions, and how the models are distributed and used. The scores were calculated based on publicly available data – although the companies were given the opportunity to provide additional information that could change their scores.

What does transparency look like for some of the most widely used foundation models? Not great.

“No major foundation model developer is close to providing adequate transparency, revealing a fundamental lack of transparency in the AI industry,” Stanford researchers wrote in the summary of their findings.

Meta’s Llama 2 received the highest total score on the index, at 54 out of 100.

OpenAI’s GPT-4 scored 48 – which is not surprising since OpenAI decided to withhold details “about architecture (including model size), hardware, training compute, dataset construction, [and] training method” when moving from GPT-3 to GPT-4 earlier this year.

For the most part, powerful AI systems – those general-purpose creations like OpenAI’s GPT-4 and Google’s PaLM 2 – are black-box systems. These models are trained on large volumes of data and can be adapted for use in a dizzying array of applications. But for a growing group of people concerned about how AI is being used and how it could impact individuals, the fact that little information is available about how these models are trained and used is a problem.

There is a bit more transparency around user data protection and the models’ basic functionality, according to the researchers. AI developers scored relatively well on indicators related to user data protection (67%), basic details about how their foundation models are developed (63%), the capabilities of their models (62%), and their limitations (60%).

The new executive order lays out several steps to improve transparency. AI developers will need to share safety test results and other information with the government. The National Institute of Standards and Technology is tasked with creating standards to ensure AI tools are safe and secure before public release.

Companies developing models that pose serious risks to public health and safety, the economy, or national security will have to notify the federal government when training the model and share results of red-team safety tests before making models public.